40 one hot encoding vs label encoding

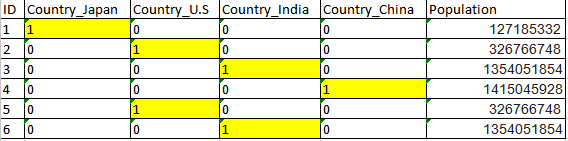

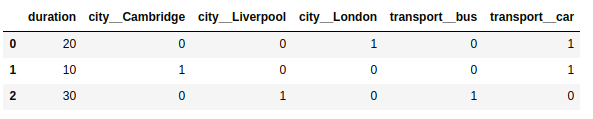

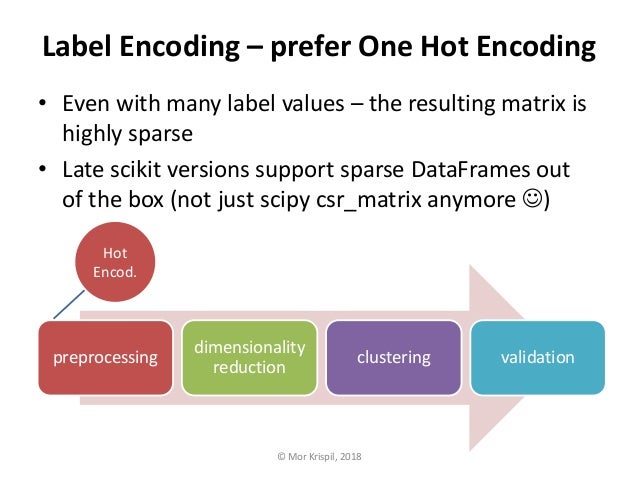

towardsdatascience.com · Encoding Categorical Variables: One-hot vs Dummy Encoding · Dec 16, 2021 · This is because one-hot encoding has added 20 extra dummy variables when encoding the categorical variables. So, one-hot encoding expands the feature space (dimensionality) in your dataset. Implementing dummy encoding with Pandas. To implement dummy encoding to the data, you can follow the same steps performed in one-hot encoding.

One-Hot Encoding vs. Label Encoding using Scikit-Learn What is One-Hot Encoding? When should you use One-Hot Encoding over Label Encoding? These are typical data science interview questions every aspiring data scientist needs to know the answer to. After all, you'll often find yourself having to make a choice between the two in a data science project! Machines understand numbers, not text.
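The difference between one-hot and dummy encoding mentioned above can be sketched with pandas. This is a minimal illustration, not the code from the linked article: the `color` column and its values are made up, and `drop_first=True` is one way (assumed here) to get the k - 1 dummy columns.

```python
import pandas as pd

# Hypothetical toy data; the column name and values are illustrative only.
df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})

# One-hot encoding: one indicator column per category (3 columns here).
one_hot = pd.get_dummies(df, columns=["color"])

# Dummy encoding: drop the first category, leaving k - 1 columns (2 here).
dummy = pd.get_dummies(df, columns=["color"], drop_first=True)

print(list(one_hot.columns))
print(list(dummy.columns))
```

The dropped category becomes the implicit baseline: a row with zeros in every remaining dummy column belongs to it.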

Categorical Encoding | One Hot Encoding vs Label Encoding · Jun 25, 2020 · When to use a Label Encoding vs. One Hot Encoding. This question generally depends on your dataset and the model which you wish to apply. But still, a few points to note before choosing the right encoding technique for your model: We apply One-Hot Encoding when: The categorical feature is not ordinal (like the countries above) The number of ...

One hot encoding vs label encoding

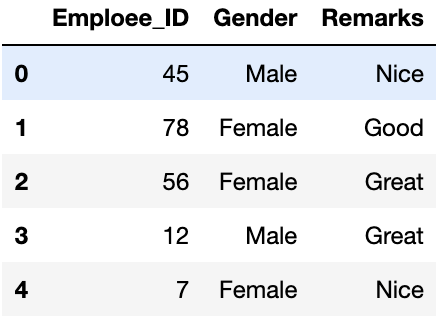

Data Science in 5 Minutes: What is One Hot Encoding? One hot encoding makes our training data more useful and expressive, and it can be rescaled easily. By using numeric values, we more easily determine a probability for our values. In particular, one hot encoding is used for our output values, since it provides more nuanced predictions than single labels.

One-Hot Encoding vs. Label Encoding.docx - UNCLASSIFIED... View One-Hot Encoding vs. Label Encoding.docx from DAT 8130 at Algonquin College. UNCLASSIFIED One-Hot Encoding vs. Label Encoding 1. What is Categorical Encoding? 2. Different Approaches to

Encoding categorical columns - Label encoding vs one hot encoding for ... The ACCURACY SCORE of various models on train and test are: The accuracy score of simple decision tree on label encoded data : TRAIN: 86.46% TEST: 79.42% The accuracy score of tuned decision tree on label encoded data : TRAIN: 81.74% TEST: 81.33% The accuracy score of random forest ensembler on label encoded data: TRAIN: 82.26% TEST: 81.63% The...

When to use One Hot Encoding vs LabelEncoder vs DictVectorizor? Still there are algorithms like decision trees and random forests that can work with categorical variables just fine and LabelEncoder can be used to store values using less disk space. One-Hot-Encoding has the advantage that the result is binary rather than ordinal and that everything sits in an orthogonal vector space.

What are the pros and cons of label encoding categorical features ... Answer: If the cardinality (the # of categories) of the categorical features is low (relative to the amount of data) one-hot encoding will work best. You can use it as input into any model. But if the cardinality is large and your dataset is small, one-hot encoding may not be feasible, and you m...

One-hot Encoding vs Label Encoding - Vinicius A. L. Souza The main reason why we would use one-hot encoding over label encoding is for situations where each category has no order nor relationship. On a ML model, a larger number can be seen as having a higher priority, which might not be the case. One-hot encoding guarantees that each category is seen with the same priority.

sklearn.preprocessing.OneHotEncoder - scikit-learn 1.1.1 documentation This encoding is needed for feeding categorical data to many scikit-learn estimators, notably linear models and SVMs with the standard kernels. Note: a one-hot encoding of y labels should use a LabelBinarizer instead. Read more in the User Guide. Parameters. categories: 'auto' or a list of array-like, default='auto'.
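The split described in the scikit-learn snippet, `OneHotEncoder` for feature columns and `LabelBinarizer` for `y` labels, might look like the sketch below. The country and answer values are invented for illustration. `.toarray()` is used because the encoder returns a sparse matrix by default, and recent scikit-learn releases accept string categories directly.

```python
import numpy as np
from sklearn.preprocessing import LabelBinarizer, OneHotEncoder

# Hypothetical feature column (2-D: rows x 1 feature) and target labels.
X = np.array([["France"], ["Spain"], ["Germany"], ["Spain"]])
y = ["yes", "no", "maybe", "no"]

# Features: OneHotEncoder, with categories inferred from the data ('auto').
encoder = OneHotEncoder(categories="auto")
X_encoded = encoder.fit_transform(X).toarray()

# Targets: LabelBinarizer, as the documentation note suggests.
binarizer = LabelBinarizer()
y_encoded = binarizer.fit_transform(y)

print(encoder.categories_[0])  # categories found for the single feature
print(X_encoded)
print(binarizer.classes_)
print(y_encoded)
```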

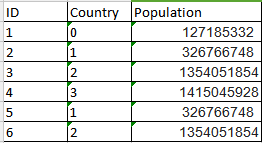

medium.com · Target Encoding Vs. One-hot Encoding with Simple Examples · Jan 16, 2020 · Label Encode (give a number value to each category, i.e. cat = 0), shown in the ‘Animal Encoded’ column in Table 3. ... One-hot encoding works well with nominal data and eliminates any ...

Categorical encoding using Label-Encoding and One-Hot-Encoder One-Hot Encoder Though label encoding is straightforward, it has the disadvantage that the numeric values can be misinterpreted by algorithms as having some sort of hierarchy/order in them. This ordering issue is addressed in another common alternative approach called 'One-Hot Encoding'.

What is Label Encoding in Python | Great Learning That is why we need to encode categorical features into a representation compatible with the models. Hence, we will cover some popular encoding approaches: Label encoding; One-hot encoding; Ordinal Encoding; Label Encoding. In label encoding in Python, we replace the categorical value with a numeric value between 0 and the number of classes ...

regression - One hot encoding vs apply the average of the label to each ... One issue with target-based encoding is that some of the categories would have a very small number of samples in the training data, e.g., zipcodes with small population. This would make the average target (label) values for those small categories unstable. This leads to over-fitting, which would negatively impact the predictive accuracy of the ...
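The instability of target encoding for small categories can be seen in a toy example. The sketch below also applies additive smoothing toward the global mean, which is one common mitigation and is not taken from the snippet above. The data, the `zipcode` values, and the smoothing weight `m` are all hypothetical.

```python
import pandas as pd

# Hypothetical training data: category "B" has a single sample.
df = pd.DataFrame({
    "zipcode": ["A", "A", "A", "A", "B"],
    "target": [1, 0, 1, 1, 0],
})

global_mean = df["target"].mean()
stats = df.groupby("zipcode")["target"].agg(["mean", "count"])

# Plain target encoding uses stats["mean"]; for "B" that rests on one row.
# Smoothing (an assumed mitigation) pulls small categories toward the
# global mean; m is a hypothetical weight chosen for illustration.
m = 2
smoothed = (stats["count"] * stats["mean"] + m * global_mean) / (stats["count"] + m)

df["zipcode_encoded"] = df["zipcode"].map(smoothed)
print(stats)
print(smoothed)
```

In practice the statistics would be computed on training data only, so that target values from validation rows do not leak into the encoding.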

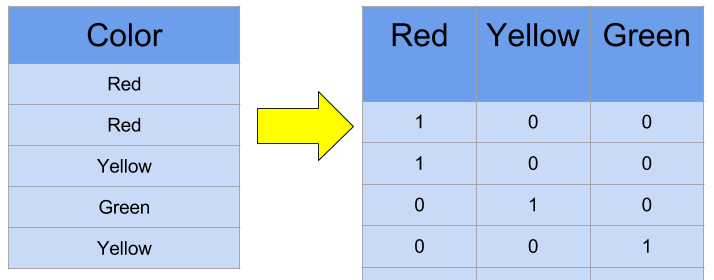

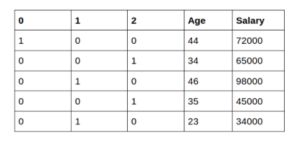

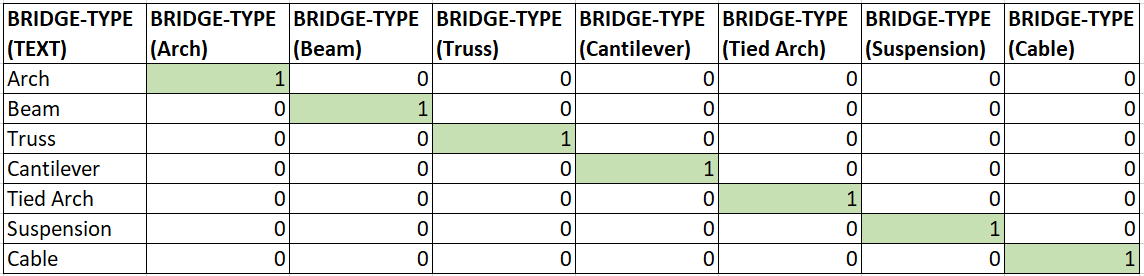

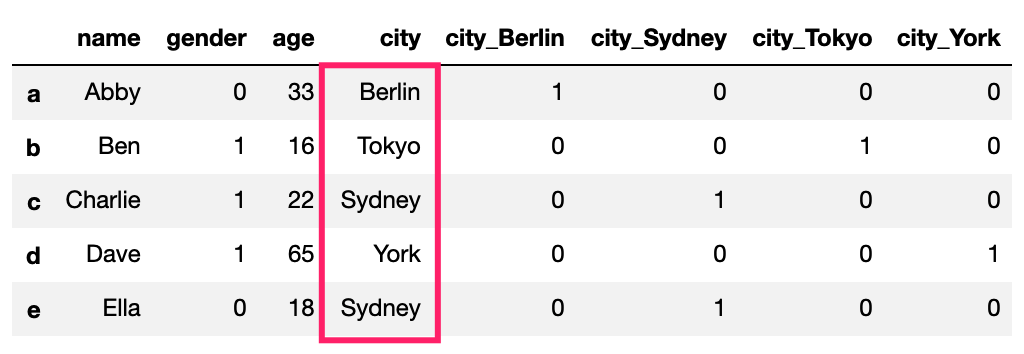

Ordinal and One-Hot Encodings for Categorical Data The two most popular techniques are an Ordinal Encoding and a One-Hot Encoding. In this tutorial, you will discover how to use encoding schemes for categorical machine learning ... Running the example first lists the three rows of label data, then the one hot encoding matching our expectation of 3 binary variables in the order "blue ...

ML | One Hot Encoding to treat Categorical data parameters · Jun 21, 2022 · One Hot Encoding using Sci-kit learn Library: One hot encoding algorithm is an encoding system of Sci-kit learn library. One Hot Encoding is used to convert numerical categorical variables into binary vectors. Before implementing this algorithm, make sure the categorical values are label encoded, as one hot encoding takes only numerical ...

Label Encoding vs. One Hot Encoding | Data Science and Machine ... - Kaggle One-Hot Encoding One-Hot Encoding transforms each categorical feature with n possible values into n binary features, with only one active. Most ML algorithms either learn a single weight for each feature or compute distances between the samples. Algorithms like linear models (such as logistic regression) belong to the first category.

When to Use One-Hot Encoding in Deep Learning? One hot encoding is an essential part of the feature engineering process when training learning models. For example, if we had a variable such as color with the labels "red," "green," and "blue," we could encode each of these labels as a three-element binary vector: Red: [1, 0, 0], Green: [0, 1, 0], Blue: [0, 0, 1].
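The red/green/blue example can be reproduced in a few lines of NumPy. This is only a sketch of the idea: the helper name `one_hot` is hypothetical, and the label order is fixed by hand to match the vectors quoted above.

```python
import numpy as np

# Label order fixed by hand so that red maps to position 0, as in the text.
labels = ["red", "green", "blue"]
index = {label: i for i, label in enumerate(labels)}

# Each row of the identity matrix is the one-hot vector for one label.
identity = np.eye(len(labels), dtype=int)

def one_hot(label):
    """Return the one-hot vector for a known label (hypothetical helper)."""
    return identity[index[label]]

for label in labels:
    print(label, one_hot(label))
```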

Categorical Encoding | One-Hot Encoding vs Label Encoding The number of categorical features is small, so one-hot encoding can be applied effectively. We apply label encoding when: The categorical feature is ordinal (like Jr. kg, Sr. kg, primary school, high school). The number of categories is quite large, as one-hot encoding can lead to high memory consumption.
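For an ordinal feature like the school levels above, the integer codes should follow the real order. One way to sketch this, assuming scikit-learn's `OrdinalEncoder` with an explicit `categories` list (a swapped-in choice, not something the snippet prescribes), is shown below. The sample rows are made up.

```python
from sklearn.preprocessing import OrdinalEncoder

# The order is stated explicitly; it is not inferred from the data.
levels = ["Jr. kg", "Sr. kg", "primary school", "high school"]

# Hypothetical samples, one feature per row.
X = [["primary school"], ["Jr. kg"], ["high school"], ["Sr. kg"]]

encoder = OrdinalEncoder(categories=[levels])
codes = encoder.fit_transform(X)

print(codes.ravel())  # position of each sample's level in `levels`
```

Without an explicit order, encoders that sort the category values would place "high school" before "primary school", which does not match the intended ranking.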

Label Encoding vs One Hot Encoding | by Hasan Ersan YAĞCI - Medium Label Encoding and One Hot Encoding 1 — Label Encoding Label encoding is mostly suitable for ordinal data. Because we give numbers to each unique value in the data. If we use label encoding in...

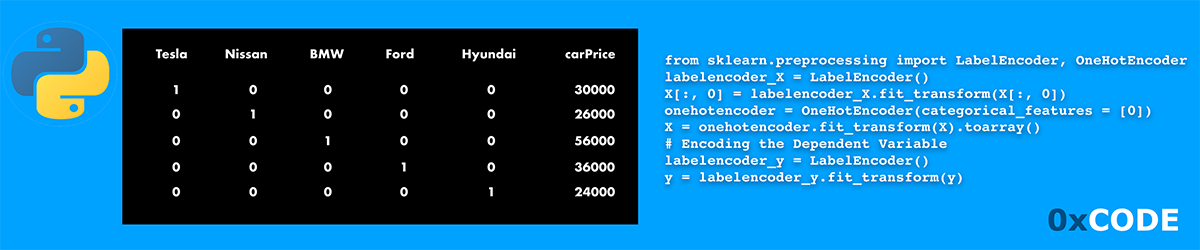

towardsdatascience.com · Choosing the right Encoding method-Label vs OneHot Encoder · Nov 08, 2018 · Let us understand the working of Label and One hot encoder and further, we will see how to use these encoders in python and see their impact on predictions. Label Encoder: Label Encoding in Python can be achieved using Sklearn Library. Sklearn provides a very efficient tool for encoding the levels of categorical features into numeric values.
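As a rough illustration of the scikit-learn tool mentioned above, the sketch below runs `LabelEncoder` on a made-up list of countries. It is not the code from the linked article.

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical categorical values.
countries = ["India", "Japan", "US", "India", "Japan"]

encoder = LabelEncoder()
codes = encoder.fit_transform(countries)

print(list(encoder.classes_))                 # sorted unique categories
print(codes.tolist())                         # integer code per sample
print(list(encoder.inverse_transform([2])))   # map a code back to its label
```

The integers only reflect the sorted order of the category names, which is why a model may read an ordering into them that the data does not have.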

Label Encoder vs One Hot Encoder in Machine Learning [2022] One hot encoding takes a column which has categorical data that has already been label encoded and then splits the column into multiple columns. The values are replaced by 1s and 0s, depending on which column has what value. The one-hot encoder does not accept 1-D arrays. The input should always be a 2-D array.
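The 2-D input requirement can be checked directly. In this sketch a made-up 1-D array of label codes is first passed as-is, which scikit-learn is expected to reject with a `ValueError`, and then reshaped into a single column with `reshape(-1, 1)`.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Hypothetical label-encoded column as a 1-D array.
codes = np.array([0, 1, 2, 0])

# Passing the 1-D array directly should be rejected.
try:
    OneHotEncoder().fit(codes)
    rejected_1d = False
except ValueError:
    rejected_1d = True

# Reshape to 2-D: 4 rows, 1 feature column.
column = codes.reshape(-1, 1)
encoded = OneHotEncoder().fit_transform(column).toarray()

print(rejected_1d)
print(encoded)
```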

Categorical Data Encoding with Sklearn LabelEncoder and OneHotEncoder As we discussed in the label encoding vs one hot encoding section above, we can clearly see the same shortcomings of label encoding in the above examples as well. With label encoding, the model had an accuracy of only 66.8%, but with one hot encoding the accuracy rose to 86.74%, a gain of about 20 percentage points.

Comparing Label Encoding And One-Hot Encoding With Python Implementation This will provide us with the accuracy score of the model using the one-hot encoding. After applying the one-hot encoder, the embarked column is encoded as C = [1, 0, 0], Q = [0, 1, 0] and S = [0, 0, 1], while male and female in the sex column are encoded as [0, 1] and [1, 0] respectively.

developers.google.com · Machine Learning Glossary | Google Developers · Mar 04, 2022 · one-hot encoding. A sparse vector in which: One element is set to 1. All other elements are set to 0. One-hot encoding is commonly used to represent strings or identifiers that have a finite set of possible values. For example, suppose a given botany dataset chronicles 15,000 different species, each denoted with a unique string identifier.

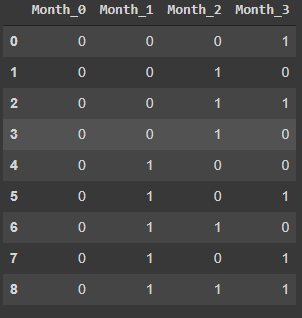

One Hot Encoding and Label Encoding - Data Science & Machine Learning Binary Encoding. Binary Encoding is similar to Label Encoding - we convert a number (from the Label Encoding) to the binary form. In a neural network, using Binary Encoding, the output layer would have exactly 2 output neurons. One Hot Encoding. In One Hot Encoding, we use as many columns as the number of classes we want to encode.
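Binary encoding as described above, writing the label-encoded integer in binary form, can be sketched in plain Python. The helper `binary_encode` is hypothetical, and the class count of four is an assumption made for the example, since the snippet does not state one.

```python
def binary_encode(code, n_classes):
    """Write a label-encoded integer as a fixed-width list of bits.

    Hypothetical helper: the width is the number of bits needed to
    represent the largest code, n_classes - 1 (at least 1 bit).
    """
    width = max(1, (n_classes - 1).bit_length())
    return [int(bit) for bit in format(code, f"0{width}b")]

# Assumed example: four classes with label codes 0..3 need two bits each.
n_classes = 4
for code in range(n_classes):
    print(code, binary_encode(code, n_classes))
```

One-hot encoding would need four columns for the same four classes, while the binary form needs two; the trade-off is that the bit columns no longer correspond to individual categories.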

One hot encoding vs label encoding (Updated 2022) That answer depends very much on your context, however given that One Hot Encoding is possible to use across all machine learning models whilst the Label Encoding tends to only work best on tree based models, I would always suggest to start with One Hot Encoding and look at Label Encoding if you see a specific need.

Label Encoder vs. One Hot Encoder in Machine Learning What one hot encoding does is, it takes a column which has categorical data, which has been label encoded, and then splits the column into multiple columns. The numbers are replaced by 1s and 0s,...

Label encoding vs Dummy variable/one hot encoding - correctness? 1 Answer. It seems that "label encoding" just means using numbers for labels in a numerical vector. This is close to what is called a factor in R. Whether you should use such label encoding does not depend on the number of unique levels; it depends on the nature of the variable (and to some extent on the software and model/method to be used). Coding ...

One Hot Encoding VS Label Encoding | by Prasant Kumar - Medium Here we use One Hot Encoders for encoding because it creates a separate column for each category, there it defines whether the value of the category is mentioned for a particular entry or not by...
